Goal: A new approach that describes free viewpoint video data as a time-varying 3D mesh sequence with associated video textures, together with efficient coding of that data.

View-Dependent Multi-Texture Scene Reconstruction

Free viewpoint video is a new technology for representing moving 3D scene content. It lets the user watch the scene from any viewpoint and thus provides direct interaction with the scene instead of passive video consumption. With increasing transmission bandwidth and more capable consumer devices, free viewpoint video becomes feasible in a number of applications, e.g. 3D TV broadcast, virtual and augmented guided tours through museums, towns, and other places of interest, or user-assisted guidance.

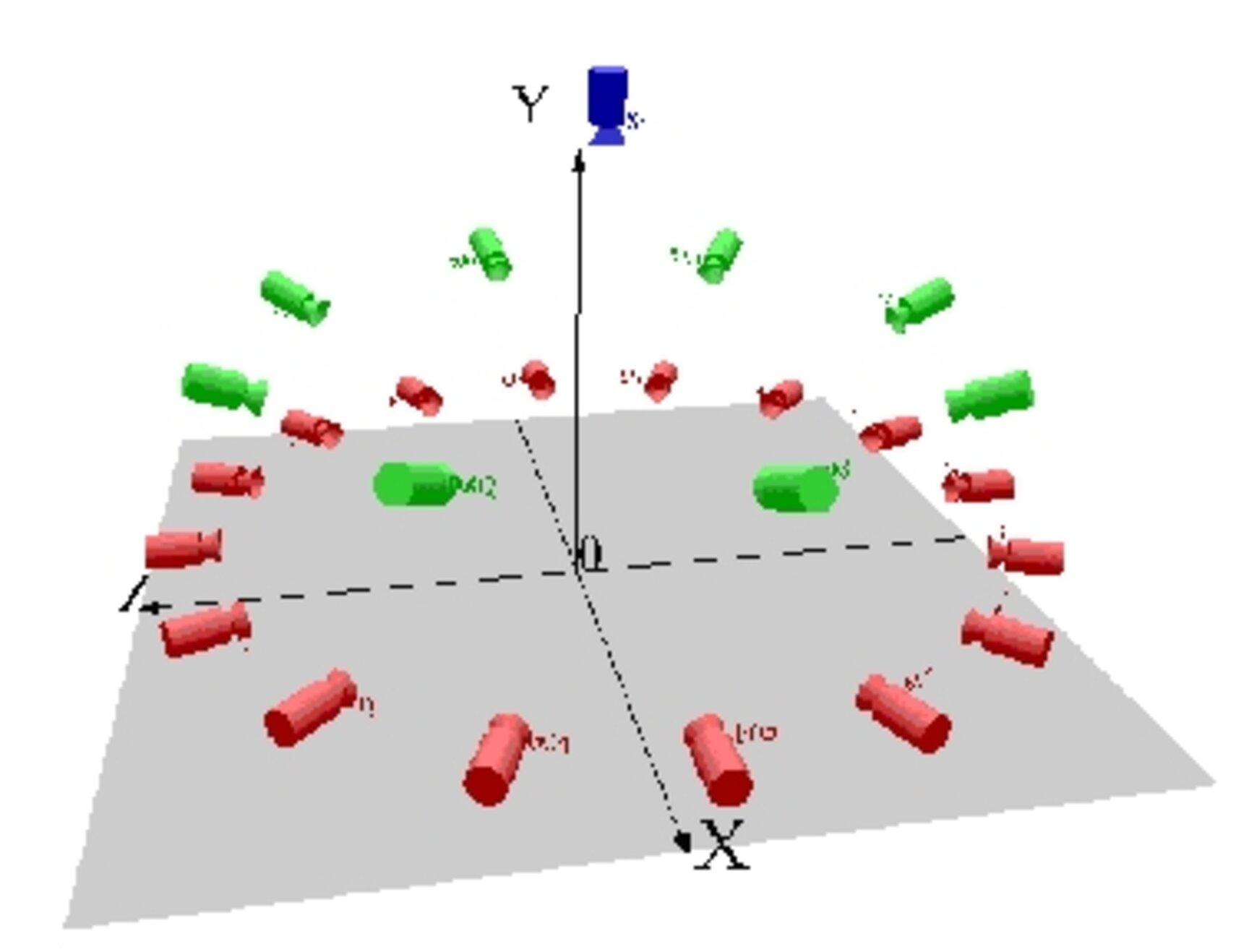

Free viewpoint video construction typically relies on a multi-camera setup as shown in Fig. 1. In general, the quality of the rendered views increases with the number of available cameras. However, equipment costs, and often the processing complexity, increase as well. We therefore address the classical tradeoff between quality and cost by limiting the number of cameras and compensating for this with geometry extraction.

Geometry Reconstruction

The first step of our algorithm derives the intrinsic and extrinsic parameters of all cameras, which relate the 2D images to a 3D world coordinate system; both our geometry extraction and our rendering algorithms require these parameters. They are computed from reference points using a standard calibration algorithm. In the next step, the object to be extracted is segmented in all camera views, using a combination of an adaptive background subtraction algorithm and Kalman filter tracking. The results of this step are silhouette videos that indicate the object’s contour for all cameras.

The 3D volume containing the object is then reconstructed from the silhouette images using an octree-based shape-from-silhouette algorithm. The process starts by placing a cube into the virtual 3D world that ideally represents the 3D bounding cube of the object. The size and position of the cube are obtained by projecting the 2D bounding boxes of all views into 3D space and analyzing the resulting intersections. This initial cube of the octree, referred to as level 0, is subdivided into 8 octants, each of which is itself a cube. For each octant, one of the following actions is taken:

- A cube that is completely inside the silhouettes of all views is not subdivided further, i.e. it is completely inside the object to be reconstructed,

- A cube that is completely outside of at least one silhouette is omitted, i.e. it is outside the object,

- A cube that does not fall into one of the above two categories is further subdivided.
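The three rules above can be sketched in a few lines. This is only a toy illustration, not the project's implementation: it assumes three orthographic views along the x, y and z axes, each seeing a disk-shaped silhouette of a given radius, instead of calibrated perspective cameras and segmented video frames. The names `classify_square` and `carve`, the `max_depth` stopping rule, and the choice to keep undecided cubes at the deepest level are all assumptions made for the example.

```python
from itertools import product


def classify_square(lo, hi, radius):
    """Classify an axis-aligned square [lo, hi] against a disk silhouette
    centred at the origin (toy stand-in for a segmented camera image)."""
    # Squared distance from the origin to the farthest corner of the square.
    far2 = sum(max(abs(a), abs(b)) ** 2 for a, b in zip(lo, hi))
    # Squared distance from the origin to the nearest point of the square.
    near2 = sum(max(a, -b, 0.0) ** 2 for a, b in zip(lo, hi))
    if far2 <= radius ** 2:
        return "inside"
    if near2 >= radius ** 2:
        return "outside"
    return "mixed"


def carve(lo, size, radius, depth, max_depth, out):
    """Recursively classify a cube with corner `lo` and edge length `size`.
    Kept cubes are appended to `out` as (corner, size) pairs."""
    hi = tuple(c + size for c in lo)
    labels = []
    for axis in range(3):  # orthographic view along this axis drops one coordinate
        keep = [i for i in range(3) if i != axis]
        labels.append(classify_square([lo[i] for i in keep],
                                      [hi[i] for i in keep], radius))
    if "outside" in labels:
        return  # outside at least one silhouette: omit the cube
    if all(label == "inside" for label in labels) or depth == max_depth:
        # Inside all silhouettes, or accuracy limit reached (assumption:
        # undecided boundary cubes are kept at the deepest level).
        out.append((lo, size))
        return
    half = size / 2
    for dx, dy, dz in product((0.0, half), repeat=3):  # the 8 octants
        carve((lo[0] + dx, lo[1] + dy, lo[2] + dz), half,
              radius, depth + 1, max_depth, out)


cubes = []
carve((-1.0, -1.0, -1.0), 2.0, 1.0, 0, 4, cubes)
volume = sum(size ** 3 for _, size in cubes)
```

With these toy silhouettes the carved volume approximates the intersection of three cylinders rather than a sphere, which illustrates that a visual hull generally over-estimates the true object.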

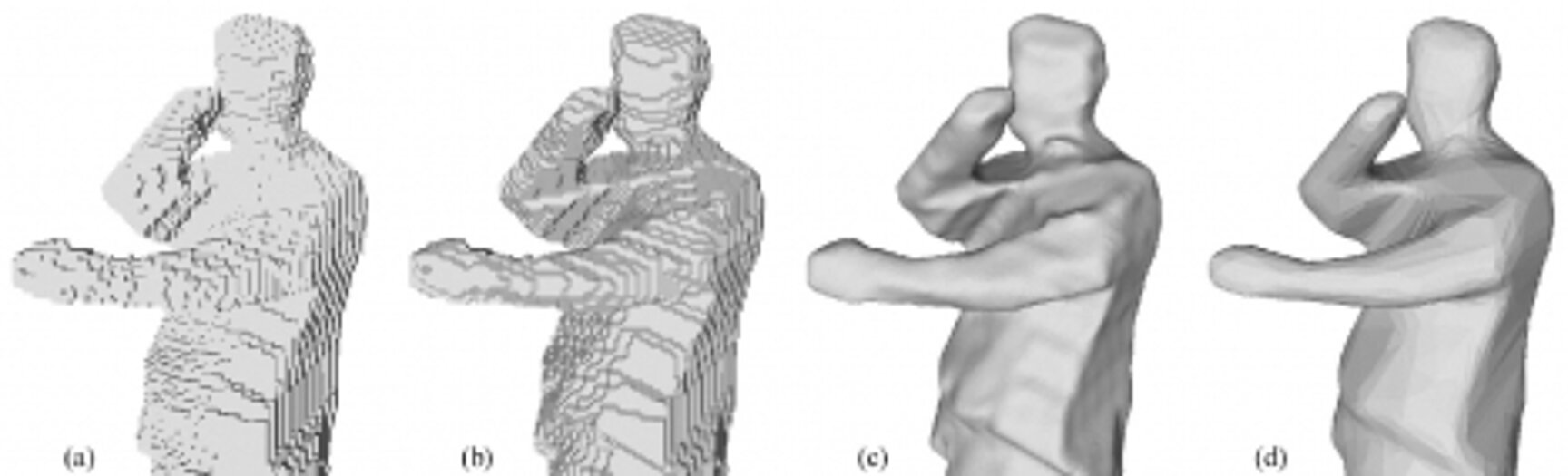

This approach is applied recursively to all octants until the contour is approximated with a given accuracy in each view. Illustratively, shape-from-silhouette can be compared to a carving process, or to the way a sculptor carves a figure from a block of marble. After visual hull segmentation, the object’s surface is extracted from the voxel model with a marching cubes algorithm and represented as a 3D mesh. The surface is then smoothed by applying first-order neighborhood smoothing. Finally, the number of surface triangles is drastically reduced using a standard edge-collapsing algorithm, which mainly analyzes the normal vectors of adjacent faces. The transformation steps from the octree model to the reduced wireframe surface model are shown in Fig. 2.
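One common reading of first-order neighborhood smoothing is Laplacian smoothing over the 1-ring: each vertex is moved part of the way toward the average of the vertices it shares an edge with. The sketch below follows that reading; the function name `smooth_mesh` and the parameters `lam` and `iterations` are assumptions for illustration, and the exact filter used in the project is not specified in the text.

```python
def smooth_mesh(vertices, triangles, lam=0.5, iterations=1):
    """Move every vertex toward the centroid of its direct (1-ring) neighbours.

    vertices:  list of (x, y, z) tuples
    triangles: list of (i, j, k) vertex-index triples
    lam:       step size in [0, 1]; 1 moves a vertex onto the centroid
    """
    # Build the first-order neighbourhood from the triangle connectivity.
    neighbours = [set() for _ in vertices]
    for a, b, c in triangles:
        neighbours[a].update((b, c))
        neighbours[b].update((a, c))
        neighbours[c].update((a, b))

    verts = [tuple(v) for v in vertices]
    for _ in range(iterations):
        updated = []
        for v, nbrs in zip(verts, neighbours):
            if not nbrs:  # isolated vertex: leave unchanged
                updated.append(v)
                continue
            centroid = [sum(verts[j][k] for j in nbrs) / len(nbrs)
                        for k in range(3)]
            updated.append(tuple(v[k] + lam * (centroid[k] - v[k])
                                 for k in range(3)))
        verts = updated  # all vertices are updated simultaneously
    return verts


# A small fan: a raised centre vertex surrounded by four ring vertices.
fan_vertices = [(0, 0, 1), (1, 0, 0), (0, 1, 0), (-1, 0, 0), (0, -1, 0)]
fan_triangles = [(0, 1, 2), (0, 2, 3), (0, 3, 4), (0, 4, 1)]
smoothed = smooth_mesh(fan_vertices, fan_triangles, lam=0.5)
```

Plain Laplacian smoothing shrinks the mesh slightly with every iteration, so a small number of iterations is the usual choice.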

Texture Mapping

For photo-realistic rendering, the original videos are mapped onto the reconstructed geometry. Natural materials may appear very different from different viewing directions, depending on their reflectance properties and the lighting conditions. Static texturing (e.g. interpolating the available views) therefore often leads to poor rendering results, the so-called "painted shoebox" effect. We have therefore developed a view-dependent texture mapping that more closely approximates natural appearance when navigating through the scene. As illustrated in Fig. 3, the textures are projected onto the geometry using the calibration information. For each projected texture, a normal vector n_i is defined that points in the direction of the original camera. To generate a virtual view in a certain direction v_VIEW, a weight is calculated for each texture, which depends on the angle between v_VIEW and n_i. The weighted interpolation ensures that at the original camera positions the virtual view is exactly the original view. The closer the virtual viewpoint is to an original camera position, the greater the influence of the corresponding texture on the virtual view.
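The text does not give the weighting formula, so the following is only one possible scheme with the two stated properties: a texture whose n_i coincides with v_VIEW gets weight 1 and all others get 0, and a smaller angle yields a larger weight. It uses normalized inverse-angle weights. The function name `texture_weights`, the tolerance `eps`, and the convention that v_VIEW and n_i both point from the object toward the (virtual or original) camera are assumptions.

```python
import math


def texture_weights(v_view, normals, eps=1e-6):
    """Return one blending weight per texture; the weights sum to 1."""

    def unit(v):
        length = math.sqrt(sum(c * c for c in v))
        return tuple(c / length for c in v)

    v = unit(v_view)
    angles = []
    for n in normals:
        cos_angle = sum(a * b for a, b in zip(v, unit(n)))
        cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
        angles.append(math.acos(cos_angle))

    # At an original camera position the view must equal the original view.
    for i, angle in enumerate(angles):
        if angle < eps:
            return [1.0 if j == i else 0.0 for j in range(len(angles))]

    # Otherwise: inverse-angle weights, normalized (an assumed weighting law).
    inverse = [1.0 / angle for angle in angles]
    total = sum(inverse)
    return [w / total for w in inverse]


camera_normals = [(1, 0, 0), (0, 1, 0)]
at_camera = texture_weights((1, 0, 0), camera_normals)
halfway = texture_weights((1, 1, 0), camera_normals)
```

In a renderer, these weights would typically be evaluated once per frame and used to blend the projected textures on each surface triangle.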

Scene Representation and 3D Mesh Coding

For widespread use of 3D video objects in interactive applications, the scene description needs to conform to a standard. Since MPEG-4 already provides a number of functionalities for synthetic 3D objects, we used MPEG-4 SNHC elements for the geometry and texture description. Furthermore, the developed view-dependent multi-texturing was integrated into MPEG-4 AFX, so that the entire scene description is done in MPEG-4. For transmission, the different parts of the developed scene description have to be coded. Here, we investigated the available technology and implemented a 3D mesh coding scheme for compression of the object geometry.

Scene Representation

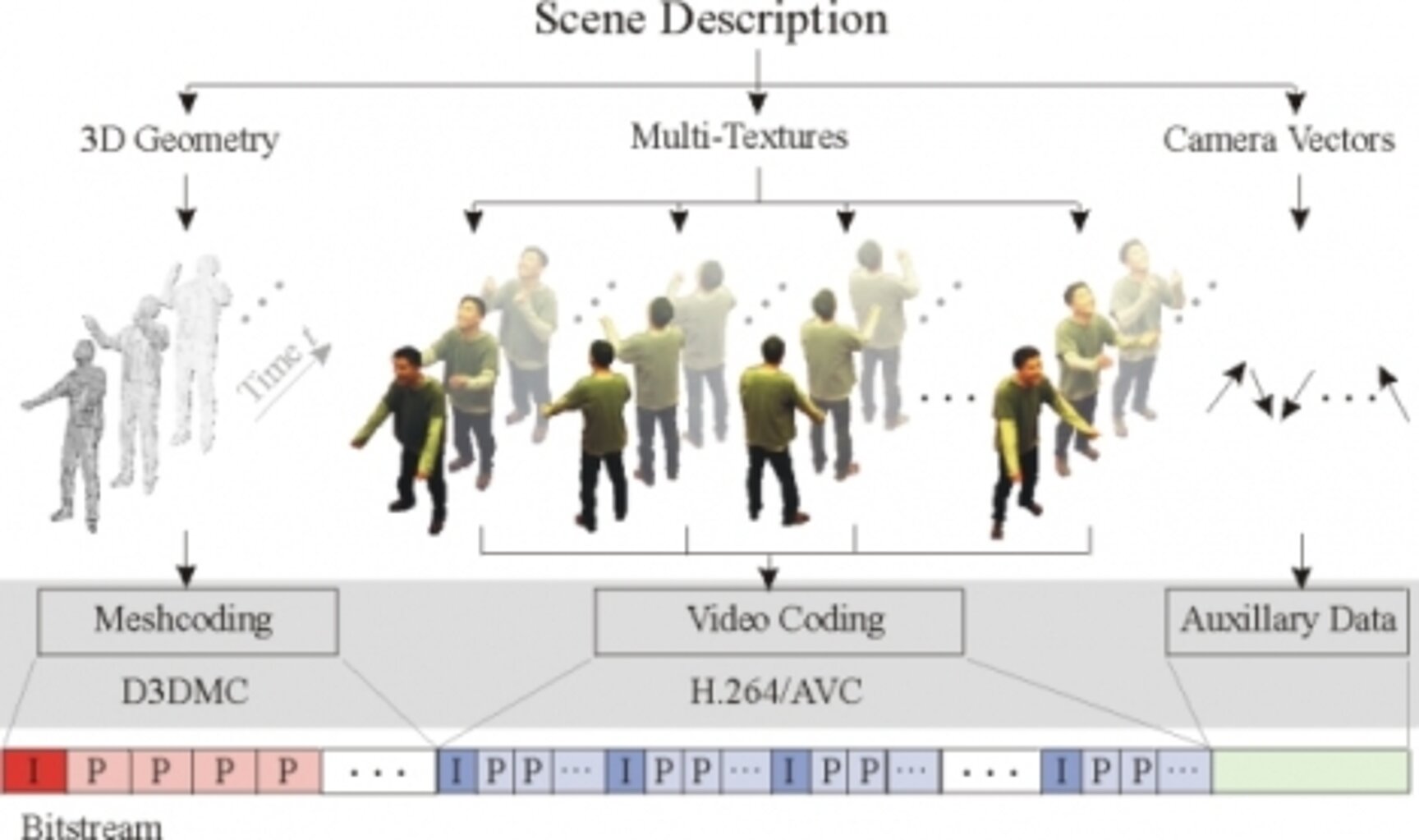

Once a 3D video object has been constructed from a number of original cameras, its 3D geometry and the texture information from all cameras can be assembled into a standardized scene description, as shown in Fig. 4.

Here, the scene description contains the geometry information as a mesh or wireframe sequence, with a single mesh for each time instance. Furthermore, a number of original textures are added together with the associated camera vectors to enable view-dependent object rendering. All components are described by the MultiTexture node, which was integrated into MPEG-4 AFX to provide standard conformity. Fig. 4 also shows the underlying coders: D3DMC, the newly developed mesh coder, for the geometry, and the state-of-the-art video codec H.264/AVC for efficient video coding.

3D Mesh Coding

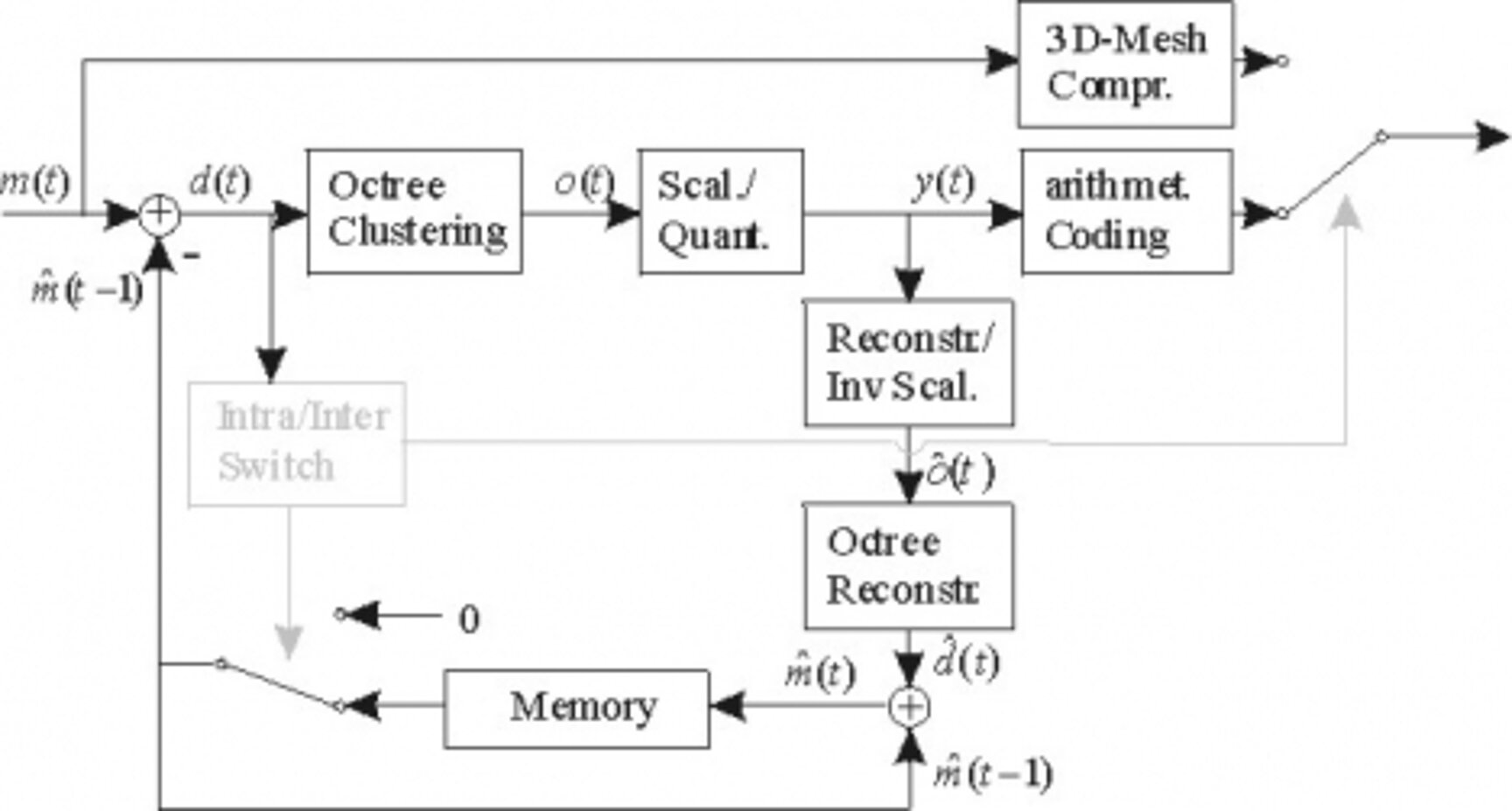

In the developed scene description, the geometry consists of a mesh sequence that, if the object’s 3D motion is limited, contains time intervals of constant mesh topology. The meshes of each such interval are grouped into a group of meshes (GOM) with constant wireframe connectivity. To exploit the spatial and temporal coherence within such GOMs, we developed a predictive mesh coding structure and named it D3DMC (differential 3D mesh coding).
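The grouping into GOMs can be sketched as a single pass over the mesh sequence that starts a new group whenever the connectivity changes. The function name `group_into_goms` and the representation of connectivity as a list of vertex-index triples are assumptions for the example.

```python
def group_into_goms(connectivities):
    """Group consecutive time instances with identical connectivity.

    connectivities: one triangle list per time instance,
                    e.g. [(0, 1, 2), (0, 2, 3)]
    Returns a list of GOMs, each a list of time indices.
    """
    goms = []
    for t, connectivity in enumerate(connectivities):
        previous = connectivities[goms[-1][-1]] if goms else None
        if goms and previous == connectivity:
            goms[-1].append(t)   # same wireframe: extend the current GOM
        else:
            goms.append([t])     # connectivity changed: start a new GOM
    return goms


one_triangle = [(0, 1, 2)]
two_triangles = [(0, 1, 2), (0, 2, 3)]
sequence = [one_triangle, one_triangle,
            two_triangles, two_triangles, two_triangles,
            one_triangle]
goms = group_into_goms(sequence)  # [[0, 1], [2, 3, 4], [5]]
```

The first mesh of each group is the natural candidate for non-predictive coding, since no earlier mesh with the same connectivity is available.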

Fig. 5 shows a block diagram of the encoder. It contains MPEG-4 3DMC as a fallback mode, enabled through the Intra/Inter switch, which is set to one mode or the other for each 3D mesh. The Intra mode is used, for instance, when the first mesh of a GOM is encoded, i.e. when no prediction from previously transmitted meshes is used. The encoder control can also select the Intra mode in any other case, e.g. if the prediction error is too large. This fallback mode provides backward compatibility with 3DMC and ensures that the new algorithm can never be worse than the state of the art. The new predictive mode is a classical DPCM loop with arithmetic coding of the residuals. First, the previously decoded mesh is subtracted from the current mesh to be encoded. This step can only be done if time-consistent meshes with the same connectivity are available, and therefore we have constrained the mesh extraction process as described above. In the next step, a spatial clustering algorithm is applied to the difference vectors in order to compute one representative for a number of vectors. Finally, the residual signal is passed to an arithmetic coder for further lossless compression.
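The core of such a DPCM loop can be illustrated with a heavily simplified sketch. It is not D3DMC: the spatial clustering and the arithmetic coder are left out, the Intra mesh is passed through uncoded instead of going through 3DMC, and the residuals are simply quantized with a uniform step size. The names `encode_gom`, `decode_gom` and `step` are assumptions. What the sketch does show is the closed loop: the encoder predicts from the previously decoded mesh, so quantization errors do not accumulate over time.

```python
def encode_gom(meshes, step=0.01):
    """Encode one GOM; every mesh is a list of (x, y, z) vertex tuples
    with identical vertex ordering (same connectivity)."""
    intra = [tuple(v) for v in meshes[0]]   # assumption: first mesh sent as-is
    decoded_prev = intra
    residual_frames = []
    for mesh in meshes[1:]:
        symbols, reconstructed = [], []
        for vertex, predicted in zip(mesh, decoded_prev):
            # Quantized difference to the previously *decoded* vertex.
            q = tuple(round((a - b) / step) for a, b in zip(vertex, predicted))
            symbols.append(q)
            # Mirror the decoder so both sides predict from the same mesh.
            reconstructed.append(tuple(b + k * step
                                       for b, k in zip(predicted, q)))
        residual_frames.append(symbols)   # these integers would be entropy coded
        decoded_prev = reconstructed
    return intra, residual_frames


def decode_gom(intra, residual_frames, step=0.01):
    """Rebuild the mesh sequence from the Intra mesh and the residuals."""
    meshes = [[tuple(v) for v in intra]]
    for symbols in residual_frames:
        previous = meshes[-1]
        meshes.append([tuple(b + k * step for b, k in zip(p, q))
                       for p, q in zip(previous, symbols)])
    return meshes


original = [[(0.0, 0.0, 0.0)], [(0.013, 0.0, 0.0)], [(0.027, 0.0, 0.0)]]
intra_mesh, residuals = encode_gom(original, step=0.01)
decoded = decode_gom(intra_mesh, residuals, step=0.01)
```

With uniform quantization, each decoded coordinate stays within half a step of the original, independent of the position of the mesh inside the GOM.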

Publications in Journals

2006

- Peter Eisert, Peter Kauff, Aljoscha Smolic, and Ralf Schäfer:

Free View Point Video - der nächste Schritt in der Unterhaltungselektronik,

FKTG, May 2006.

2005

- Aljoscha Smolic and Peter Kauff:

Interactive 3D Video Representation and Coding Technologies,

Proc. of the IEEE, Special Issue on Advances in Video Coding and Delivery, Vol. 93, No. 1, pp. 98-110, January 2005.

2004

- Aljoscha Smolic and David McCutchen:

3DAV Exploration of Video-Based Rendering Technology in MPEG,

IEEE Transactions on Circuits and Systems for Video Technology, Special Issue on Immersive Communications, Vol. 14, No. 9, pp. 348-356, January 2004.

Publications in Conference Proceedings

2006

- Karsten Müller, Aljoscha Smolic, M. Kautzner, and Thomas Wiegand:

Rate-Distortion Optimization in Dynamic Mesh Compression,

IEEE International Conference on Image Processing (ICIP'06), Atlanta, GA, USA, October 2006.

- Aljoscha Smolic, Karsten Müller, Philipp Merkle, Christoph Fehn, Peter Kauff, Peter Eisert, and Thomas Wiegand:

3D Video and Free Viewpoint Video – Technologies, Applications and MPEG Standards,

IEEE International Conference on Multimedia and Expo (ICME'06), Toronto, Ontario, Canada, July 2006.

- Levent Onural, Thomas Sikora, Jörn Ostermann, Aljoscha Smolic, Reha Civanlar, and John Watson:

An Assessment of 3DTV Technologies,

National Association of Broadcasters Exhibition (NAB'06), Las Vegas, NV, USA, April 2006.

2005

- Aljoscha Smolic, Karsten Müller, Matthias Kautzner, Philipp Merkle, and Thomas Wiegand:

Predictive Compression of Dynamic 3D Meshes,

IEEE International Conference on Image Processing (ICIP'05), Genova, Italy, September 2005.

- Aljoscha Smolic, Karsten Müller, Philipp Merkle, Matthias Kautzner, and Thomas Wiegand:

3D Video Objects for Interactive Applications,

European Signal Processing Conference (EUSIPCO'05), Antalya, Turkey, September 2005, Invited Paper.

2004

- Karsten Müller, Aljoscha Smolic, Philipp Merkle, Matthias Kautzner, and Thomas Wiegand:

Coding of 3D Meshes and Video textures for 3D Video Objects,

Picture Coding Symposium (PCS'04), San Francisco, CA, USA, December 2004.

- Levent Onural, Aljoscha Smolic, and Thomas Sikora:

An Overview of a New European Consortium: Integrated Three-Dimensional Television - Capture, Transmission and Display (3DTV),

European Workshop on the Integration of Knowledge, Semantic, and Digital Media Technologies, London, UK, November 2004.

- Aljoscha Smolic, Karsten Müller, Philipp Merkle, Tobias Rein, Peter Eisert, and Thomas Wiegand:

Representation, Coding, and Rendering of 3D Video Objects with MPEG-4,

IEEE International Workshop on Multimedia Signal Processing (MMSP), Siena, Italy, October 2004.

- Aljoscha Smolic, Karsten Müller, Philipp Merkle, Tobias Rein, Peter Eisert, and Thomas Wiegand:

Free Viewpoint Video Extraction, Representation, Coding, and Rendering,

IEEE International Conference on Image Processing (ICIP'04), Singapore, October 2004.

- Karsten Müller, Aljoscha Smolic, Philipp Merkle, Birgit Kaspar, Peter Eisert, and Thomas Wiegand:

3D Reconstruction of Natural Scenes with View-adaptive Multitexturing,

2nd International Symposium on 3D Data Processing Visualization and Transmission, Thessaloniki, Greece, September 2004.

- Karsten Müller, Aljoscha Smolic, Birgit Kaspar, Philipp Merkle, Tobias Rein, Peter Eisert, and Thomas Wiegand:

Octree Voxel Modeling with Multi-view Texturing in Cultural Heritage Scenarios,

International Workshop on Image Analysis for Multimedia Interactive Services (WIAMIS'04), Lisbon, Portugal, April 2004.

2003

- Aljoscha Smolic and David McCutchen:

3DAV - Video-based Rendering for Interactive TV Applications,

ITG-Fachtagung Dortmunder Fernsehseminar, Dortmund, Germany, September 2003.

- Karsten Müller, Aljoscha Smolic, Michael Droese, Patrick Voigt, and Thomas Wiegand:

3D Modeling of Traffic Scenes using Multi-texture Surfaces,

Picture Coding Symposium (PCS'03), St. Malo, France, April 2003.

- Aljoscha Smolic and David McCutchen:

Efficient Representation and Coding of Omni-directional Video using MPEG-4,

International Workshop on Image Analysis for Multimedia Interactive Services (WIAMIS'03), London, UK, April 2003.

Contributions to Standardization

2006

- Arnaud Bourge, Fons Bruls, Jan van der Meer, Yann Picard, Chris Varekamp, Aljoscha Smolic, Thomas Wiegand, Martin Borchert, and Martin Beck:

Applications, Requirements and Technical Solution for the Encoding of Depth and Parallax Maps,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Bangkok, Thailand, MPEG06/M12942, January 2006.

2005

- Matthias Kautzner, Karsten Müller, Aljoscha Smolic, Philipp Merkle, and Thomas Wiegand:

Results on Core Experiment E1, Mesh Compression Framework,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Hong Kong, China, MPEG05/M11699, January 2005.

- Matthias Kautzner, Karsten Müller, Aljoscha Smolic, and Thomas Wiegand:

Results on EE1: 3D Mesh Compression Framework,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Busan, Korea, MPEG05/M11982, April 2005.

- Matthias Kautzner, Karsten Müller, Aljoscha Smolic, and Thomas Wiegand:

Preliminary Results on EE1 for D3DMC,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Poznan, Poland, MPEG05/M12300, July 2005.

- Matthias Kautzner, Karsten Müller, Aljoscha Smolic, and Thomas Wiegand:

Results on EE1 for D3DMC,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Nice, France, MPEG05/M12594, October 2005.

2004

- Aljoscha Smolic, Karsten Müller, Matthias Kautzner, Philipp Merkle, and Thomas Wiegand:

Technical description of the HHI proposal for SVC CE1,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Palma de Mallorca, Spain, MPEG04/M11244, October 2004.

- Aljoscha Smolic, Karsten Müller, Matthias Kautzner, Philipp Merkle, and Thomas Wiegand:

Predictive Compression of Dynamic 3D Meshes,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Palma de Mallorca, Spain, MPEG04/M11240, October 2004.

- Aljoscha Smolic and Hideaki Kimata:

AHG on 3DAV Coding,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Palma de Mallorca, Spain, MPEG04/M11161, October 2004.

- Stephan Würmlin, Michael Waschbüsch, Edouard Lamboray, Peter Kaufmann, Aljoscha Smolic, and Markus Gross:

Image-space Free-viewpoint Video,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Redmond, USA, MPEG04/M10894, July 2004.

- Karsten Müller and Aljoscha Smolic:

View-Dependent Multi-Texturing for MPEG-4 AFX, Syntax and Semantics Specification Update,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Redmond, USA, MPEG04/M11031, July 2004.

- Aljoscha Smolic and Hideaki Kimata:

AHG on 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Redmond, USA, MPEG04/M10795, July 2004.

- Aljoscha Smolic, Sebastian Heymann, Karsten Müller, and Yong Guo:

Demonstration of a MPEG-4 Compliant System for Omni-directional Video,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Munich, Germany, MPEG04/M10674, March 2004.

- Aljoscha Smolic:

Response to the Call for Comments on 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Munich, Germany, MPEG04/M10673, March 2004.

- Aljoscha Smolic and Hideaki Kimata:

AHG on 3DAV Coding,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Munich, Germany, MPEG04/M10479, March 2004.

- Aljoscha Smolic, Karsten Müller, Philipp Merkle, Tobias Rein, Yuri Vatis, Peter Eisert, and Thomas Wiegand:

Results for EE2 on Model Reconstruction Free Viewpoint Video,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Munich, Germany, MPEG04/M10676, March 2004.

2003

- Karsten Müller, Aljoscha Smolic, and Tobias Rein:

Preliminary Results on Core Experiments on View-Dependent Multi-Texturing for MPEG-4 AFX,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Waikoloa, HI, USA, MPEG03/M10344, December 2003.

- Aljoscha Smolic and Hideaki Kimata:

AHG on 3DAV Coding,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Waikoloa, HI, USA, MPEG03/M10218, December 2003.

- Aljoscha Smolic, Karsten Müller, and Philipp Merkle:

Preliminary Results on EE2 Using Octree Reconstruction and View-dependent Texture Mapping,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Waikoloa, HI, USA, MPEG03/M10343, December 2003.

- Aljoscha Smolic and Hideaki Kimata:

AHG on 3DAV Coding,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Trondheim, Norway, MPEG03/M9635, July 2003.

- Aljoscha Smolic and David McCutchen:

Requirement for Random Access and View-dependent Partial Decoding and Rendering in 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Trondheim, Norway, MPEG03/M9839, July 2003.

- Aljoscha Smolic, Karsten Müller, Michael Droese, Birgit Kaspar, Philipp Merkle, Peter Eisert, and Thomas Wiegand:

Multi-texture Surfaces for View-dependent Rendering in Free Viewpoint Video and Graphics,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Trondheim, Norway, MPEG03/M9837, July 2003.

- Christoph Fehn, Klaas Schüür, Peter Kauff, and Aljoscha Smolic:

Meta-Data Requirements for EE4 in MPEG 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Pattaya, Thailand, MPEG03/M9559, April 2003.

- Aljoscha Smolic and Hideaki Kimata:

AHG on 3DAV Coding,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Pattaya, Thailand, MPEG03/M9371, April 2003.

- Christoph Fehn, Klaas Schüür, Peter Kauff, and Aljoscha Smolic:

Coding Results for EE4 in MPEG 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Pattaya, Thailand, MPEG03/M9561, April 2003.

2002

- Aljoscha Smolic and David McCutchen:

Requirement for very high resolution video in 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Awaji Island, Japan, MPEG02/M9256, December 2002.

- Christoph Fehn, Klaas Schüür, Ingo Feldmann, Peter Kauff, and Aljoscha Smolic:

Distribution of ATTEST Test Sequences for EE4 in MPEG 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Awaji Island, Japan, MPEG02/M9219, December 2002.

- Aljoscha Smolic and Ryozo Yamashita:

AHG on 3DAV Coding,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Awaji Island, Japan, MPEG02/M9097, December 2002.

- Christoph Fehn, Klaas Schüür, Ingo Feldmann, Peter Kauff, and Aljoscha Smolic:

Proposed experimental conditions for EE4 in MPEG 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Shanghai, China, MPEG02/M9016, October 2002.

- Aljoscha Smolic and Michael Droese:

Results of EE1 on Usage of 3D Mesh Objects for Omni-directional Video,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Shanghai, China, MPEG02/M8927, October 2002.

- Aljoscha Smolic and Ryozo Yamashita:

AHG on 3DAV Coding,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Shanghai, China, MPEG02/M8785, October 2002.

- David McCutchen and Aljoscha Smolic:

Spherical Efficiency in Global Photography Mapping,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Klagenfurt, Austria, MPEG02/M8678, July 2002.

- Karsten Müller and Aljoscha Smolic:

Study of 3D Coordinate System and Sensor Parameters for 3DAV,

MPEG Meeting - ISO/IEC JTC1/SC29/WG11, Klagenfurt, Austria, MPEG02/M8536, July 2002.

Exhibitions

2004

- Thomas Wiegand:

Augmented Reality and Free Viewpoint Video,

VDE-Kongress 2004, Berlin, Germany, October 2004.

- Thomas Wiegand:

3D IMedia - 3D Scene Reconstruction for Interactive Media,

CeBIT 2004, Hannover, Germany, March 2004.