Concept-based explanations improve our understanding of deep neural networks by communicating the driving factors of a prediction in terms of human-understandable concepts (see, e.g., CRP [1]). However, inspecting every single sample to understand the model's behavior on the whole dataset is laborious.

With PCX (Prototypical Concept-based eXplanations), we introduce a novel explainability method that enables a quicker and easier understanding of model (sub-)strategies on the whole dataset by summarizing similar concept-based explanations into prototypes.

For a model trained to discern dogs from cats, a prototype of the dog class could correspond to a specific dog breed present in a subpopulation of the training data.

Concretely, PCX makes it possible to

- quickly understand model (sub-)strategies in detail on the concept level via prototypes

- spot spurious model behavior or data quality issues

- catch outlier predictions or assign valid predictions to prototypes

- identify missing and overused features in a prediction w.r.t. the prototypical behavior.

As such, PCX is taking important steps towards a holistic understanding of the model and more objective and applicable XAI.

PCX prototypes are computed by first generating concept-based explanations on the whole training set using, e.g., our CRP method. For each sample and the corresponding model prediction, we obtain relevance scores for the concepts used by the model. Having collected all explanations, we then fit a Gaussian Mixture Model (GMM) to the distribution of explanations for each output class. A single Gaussian component of a GMM then corresponds to one prototype that summarizes multiple similar explanations. To visualize a prototype, we show the training sample closest to the Gaussian's mean as a prototypical example.
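The prototype computation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the reference implementation: the concept relevance vectors are synthetic stand-ins for real CRP outputs, the helper name `fit_prototypes` is hypothetical, scikit-learn's `GaussianMixture` is used for the GMM, and "closest" is taken to mean smallest Euclidean distance to the component mean.

```python
import numpy as np
from sklearn.mixture import GaussianMixture


def fit_prototypes(relevances, n_prototypes=2, seed=0):
    """Fit a GMM to the concept relevance vectors of ONE output class.

    relevances: array of shape (n_samples, n_concepts).
    Returns the fitted GMM and, for each Gaussian component, the index of
    the training sample closest to its mean (the prototypical example).
    """
    gmm = GaussianMixture(
        n_components=n_prototypes,
        covariance_type="full",
        reg_covar=1e-4,  # small regularizer for numerical stability
        random_state=seed,
    )
    gmm.fit(relevances)

    # Euclidean distance of every sample to every component mean
    # (assumption: the distance measure is not specified in the text).
    dists = np.linalg.norm(
        relevances[:, None, :] - gmm.means_[None, :, :], axis=-1
    )
    closest = dists.argmin(axis=0)  # one sample index per prototype
    return gmm, closest


# Synthetic stand-in for the explanations of one class: two sub-strategies
# that rely on different concepts (concept 0 vs. concept 1).
rng = np.random.default_rng(0)
strategy_a = rng.normal(loc=[1.0, 0.0, 0.0], scale=0.05, size=(50, 3))
strategy_b = rng.normal(loc=[0.0, 1.0, 0.0], scale=0.05, size=(50, 3))
relevances = np.vstack([strategy_a, strategy_b])

gmm, prototype_idx = fit_prototypes(relevances, n_prototypes=2)
print("prototype means:\n", gmm.means_)
print("prototypical training samples:", prototype_idx)
```

In practice, one such model would be fitted per output class, and the number of prototypes is a hyperparameter that has to be chosen for the task at hand.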

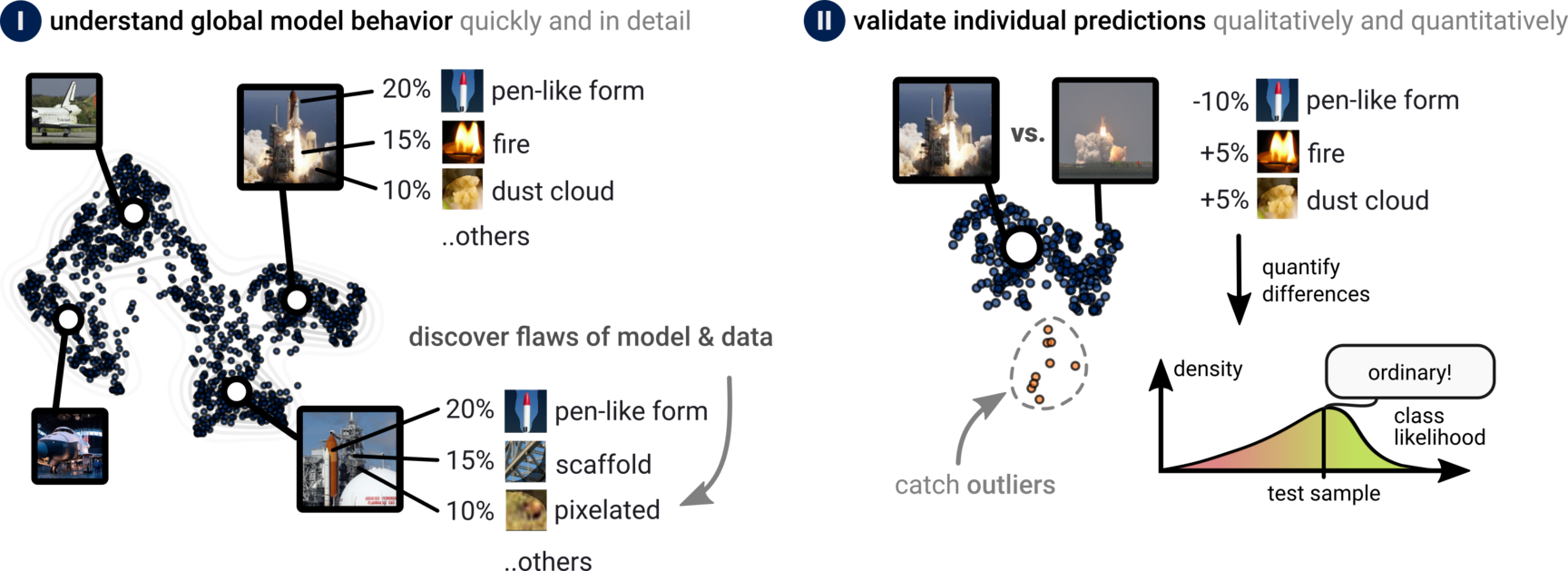

By inspecting the PCX prototypes together with the explanation distribution as in Fig. 1 (left), we get a quick idea of the prediction strategies the model has learned. In such a “strategy map”, similar explanations are located close together. As our prototypes are based on concept-based explanations, we can now dive deeper and study the characteristic concepts of each prototype.

Further, during deployment, PCX allows for more objective explanations that are also practical for validating models and their predictions. Concretely, PCX lets us assess how typical or atypical a single prediction is. On the one hand, PCX allows studying the difference to the expected model behavior qualitatively in terms of concepts. Notably, contrary to many other explainability methods, we can understand not only which features are present, but also which are missing w.r.t. the prototypes, as illustrated in Fig. 1 (right). On the other hand, as prototypes are based on Gaussian distributions, the probability density function of each distribution provides a quantitative likelihood measure. The more unusual the concepts used for an output class, the lower the measured likelihood.
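Both views of a single prediction, the quantitative likelihood and the qualitative concept difference, can be illustrated with a short sketch. The function names, the toy means and covariances, and the two example vectors are hypothetical. As a simplification, each prototype's Gaussian density is evaluated on its own and the mixture weights are ignored.

```python
import numpy as np


def gaussian_log_density(x, mean, cov):
    """Log of the multivariate Gaussian density N(x; mean, cov)."""
    d = mean.shape[0]
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)  # squared Mahalanobis distance
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)


def compare_to_prototypes(x, means, covs):
    """Assign an explanation x to its most likely prototype.

    Returns the prototype index, the log-likelihood under that prototype,
    and the per-concept difference to the prototype mean
    (positive: concept used more than usual, negative: used less / missing).
    """
    log_liks = np.array(
        [gaussian_log_density(x, m, c) for m, c in zip(means, covs)]
    )
    best = int(log_liks.argmax())
    delta = x - means[best]
    return best, float(log_liks[best]), delta


# Toy prototypes over two concepts (hypothetical values).
means = np.array([[1.0, 0.0], [0.0, 1.0]])
covs = np.array([0.01 * np.eye(2), 0.01 * np.eye(2)])

typical = np.array([0.95, 0.05])   # close to prototype 0
atypical = np.array([0.20, 0.20])  # far from both prototypes

proto_t, ll_typical, delta_t = compare_to_prototypes(typical, means, covs)
proto_a, ll_atypical, delta_a = compare_to_prototypes(atypical, means, covs)
print(proto_t, ll_typical, delta_t)
print(proto_a, ll_atypical, delta_a)
```

A low log-likelihood under every prototype flags a prediction as a potential outlier, while the sign of each entry in the difference vector indicates whether a concept is overused or underused relative to the assigned prototype.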

Concept-based prototypes of PCX allow for a detailed yet quick understanding of the decision-making processes of DNNs, requiring significantly less human effort compared to studying single prediction explanations. In [2], we showcase PCX and find sub-strategies and data quality issues/biases on public benchmark datasets. Notably, the identification of spurious behavior is a key step for ensuring trust and safety in AI. Further, PCX also opens up new possibilities for knowledge discovery in science applications.

References

| [1] | Reduan Achtibat, Maximilian Dreyer, Ilona Eisenbraun, Sebastian Bosse, Thomas Wiegand, Wojciech Samek, and Sebastian Lapuschkin. “From attribution maps to human-understandable explanations through Concept Relevance Propagation”. In: Nature Machine Intelligence 5.9 (Sept. 2023), pp. 1006–1019. ISSN: 2522-5839. DOI: 10.1038/s42256-023-00711-8. URL: https://doi.org/10.1038/s42256-023-00711-8. |

| [2] | Maximilian Dreyer, Reduan Achtibat, Wojciech Samek, and Sebastian Lapuschkin. “Understanding the (Extra-)Ordinary: Validating Deep Model Decisions with Prototypical Concept-based Explanations”. In: 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) (Seattle, Washington, United States of America, June 17–18, 2024). IEEE, 2024, pp. 3491–3501. ISBN: 979-8-3503-6547-4. DOI: 10.1109/CVPRW63382.2024.00353. |